Need help finding source of latency

rvalkenburg

8 Posts

November 4, 2022, 5:18 pm

Hello,

I am looking for help trying to track down the cause of some disk latency.

Our current setup:

PetaSAN 2.8

10 nodes, 24 SSDs per node

40 cores per node

128GB of RAM per node

Bonded 10Gbit using LACP

Ceph and iSCSI traffic using bonded interfaces

iSCSI disks are presented to VMware ESXi 6.7 U3 hosts

We get random bursts of a few seconds of latency. Any help would be appreciated.

admin

2,961 Posts

November 4, 2022, 8:33 pm

1 What model of SSDs do you use?

2 What controller do you use, and do you have any RAID setup?

3 Does this happen for all OSDs or just some of them?

4 Does this happen during specific workloads like backups, all the time, or periodically?

5 Are you using workloads other than iSCSI?

6 Are you using any PetaSAN write cache?

7 Can you disable the volatile write cache on the drives using hdparm or nvme commands?

8 Can you temporarily switch off scrub and deep-scrub from the maintenance tab and see if it affects this?

9 Run

iostat -dxt 2 > iostat.log

and see if you get high w_await and if so, look at the %util, wareq-sz, wkB/s

10 Do you see any errors or latency warnings in the osd logs in /var/log/ceph ?

11 Other useful info can be obtained from

ceph daemon osd.X perf dump (look at latency values)

ceph daemon osd.X dump_historic_ops (within 10 min of high value in chart)
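
For step 9, a small filter can make the iostat log easier to scan. This is only a sketch: the function name `high_w_await` and the threshold argument are made up for illustration, and it assumes the log has a `Device` header row containing the `w_await`, `%util`, `wareq-sz` and `wkB/s` columns (column names and order differ between sysstat versions, so the columns are looked up by name rather than position).

```shell
#!/bin/bash
# high_w_await THRESHOLD_MS [FILE]
# Print every device sample whose w_await exceeds THRESHOLD_MS.
# Hypothetical helper, not part of PetaSAN or sysstat.
high_w_await() {
    local threshold="$1"
    local file="${2:--}"          # "-" makes awk read standard input
    awk -v limit="$threshold" '
        # Header row: remember the position of every column by name.
        $1 == "Device" || $1 == "Device:" {
            ncol = NF
            for (i = 1; i <= NF; i++) col[$i] = i
            next
        }
        # Short non-empty rows are assumed to be the timestamps added by -t.
        NF > 0 && NF <= 3 { stamp = $0; next }
        # Device rows have as many fields as the header row.
        ncol > 0 && NF == ncol && ("w_await" in col) && $(col["w_await"]) + 0 > limit + 0 {
            printf "%s %s w_await=%s %%util=%s wareq-sz=%s wkB/s=%s\n",
                stamp, $1, $(col["w_await"]), $(col["%util"]),
                $(col["wareq-sz"]), $(col["wkB/s"])
        }
    ' "$file"
}
```

For example, `high_w_await 10 iostat.log` would list only the samples where a device took longer than 10 ms on average to complete writes, together with the timestamp of that sample.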

rvalkenburg

8 Posts

November 4, 2022, 9:09 pm

1. Crucial MX500 1TB SSD

2. AOC-S3008-L8i HBA (no RAID)

3. It seems random

4. Well, we do hourly backups on most of our VMs (a total of 50 sitting on the storage cluster)

5. No, only iSCSI

6. No write cache

7. Perhaps?

8. Yes, I can try that

9. Highest w_await was 12.77 (most were between 0 and 5), highest %util was 51.8 (most were between 1 and 7), highest wareq-sz was 77 (most were between 0 and 7, however I did see a few 10, 13, 25), highest wkB/s was 3845 (most were between

10. Yes, on some OSDs but not all (/var/lib/ceph/osd/ceph-20): log_latency_fn slow operation observed for _txc_committed_kv, latency = 5.742069367s

11. Here is the perf dump from the same osd.20

"recoverystate_perf": {

"initial_latency": {

"avgcount": 187,

"sum": 0.038535012,

"avgtime": 0.000206069

},

"started_latency": {

"avgcount": 509,

"sum": 10300276.394202555,

"avgtime": 20236.299399219

},

"reset_latency": {

"avgcount": 696,

"sum": 0.091873184,

"avgtime": 0.000132001

},

"start_latency": {

"avgcount": 696,

"sum": 0.038111891,

"avgtime": 0.000054758

},

"primary_latency": {

"avgcount": 43,

"sum": 606856.540345384,

"avgtime": 14112.942798729

},

"peering_latency": {

"avgcount": 73,

"sum": 70.994576213,

"avgtime": 0.972528441

},

"backfilling_latency": {

"avgcount": 14,

"sum": 3777.554051844,

"avgtime": 269.825289417

},

"waitremotebackfillreserved_latency": {

"avgcount": 14,

"sum": 667.901702636,

"avgtime": 47.707264474

},

"waitlocalbackfillreserved_latency": {

"avgcount": 16,

"sum": 303.140102551,

"avgtime": 18.946256409

},

"notbackfilling_latency": {

"avgcount": 0,

"sum": 0.000000000,

"avgtime": 0.000000000

},

"repnotrecovering_latency": {

"avgcount": 515,

"sum": 8863758.466705283,

"avgtime": 17211.181488748

},

"repwaitrecoveryreserved_latency": {

"avgcount": 1,

"sum": 0.000065646,

"avgtime": 0.000065646

},

"repwaitbackfillreserved_latency": {

"avgcount": 206,

"sum": 541972.319619940,

"avgtime": 2630.933590388

},

"reprecovering_latency": {

"avgcount": 145,

"sum": 48254.181497900,

"avgtime": 332.787458606

},

"activating_latency": {

"avgcount": 60,

"sum": 8.413385900,

"avgtime": 0.140223098

},

"waitlocalrecoveryreserved_latency": {

"avgcount": 0,

"sum": 0.000000000,

"avgtime": 0.000000000

},

"waitremoterecoveryreserved_latency": {

"avgcount": 0,

"sum": 0.000000000,

"avgtime": 0.000000000

},

"recovering_latency": {

"avgcount": 0,

"sum": 0.000000000,

"avgtime": 0.000000000

},

"recovered_latency": {

"avgcount": 52,

"sum": 0.002363470,

"avgtime": 0.000045451

},

"clean_latency": {

"avgcount": 22,

"sum": 602058.899521991,

"avgtime": 27366.313614635

},

"active_latency": {

"avgcount": 30,

"sum": 606812.772259577,

"avgtime": 20227.092408652

},

"replicaactive_latency": {

"avgcount": 266,

"sum": 9453984.952967389,

"avgtime": 35541.296815666

},

"stray_latency": {

"avgcount": 623,

"sum": 239636.757881106,

"avgtime": 384.649691622

},

"getinfo_latency": {

"avgcount": 73,

"sum": 0.889038771,

"avgtime": 0.012178613

},

"getlog_latency": {

"avgcount": 73,

"sum": 0.167979837,

"avgtime": 0.002301093

},

"waitactingchange_latency": {

"avgcount": 5,

"sum": 4.828695894,

"avgtime": 0.965739178

},

"incomplete_latency": {

"avgcount": 0,

"sum": 0.000000000,

"avgtime": 0.000000000

},

"down_latency": {

"avgcount": 0,

"sum": 0.000000000,

"avgtime": 0.000000000

},

"getmissing_latency": {

"avgcount": 68,

"sum": 0.002043543,

"avgtime": 0.000030052

},

"waitupthru_latency": {

"avgcount": 60,

"sum": 69.932839343,

"avgtime": 1.165547322

},

"notrecovering_latency": {

"avgcount": 0,

"sum": 0.000000000,

"avgtime": 0.000000000

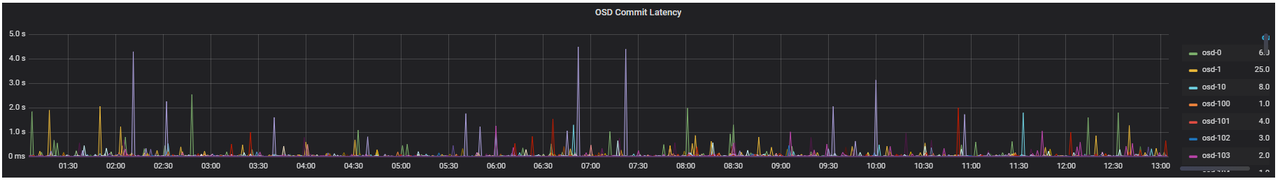

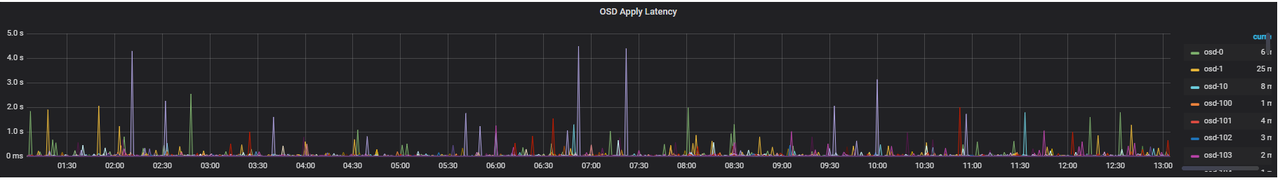

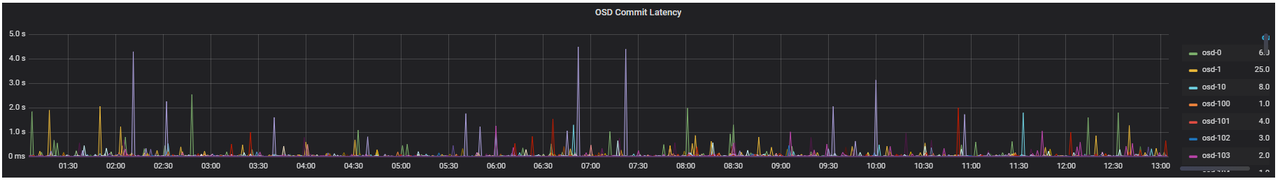

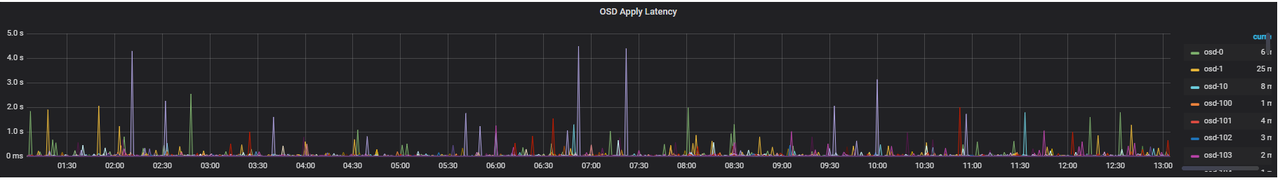

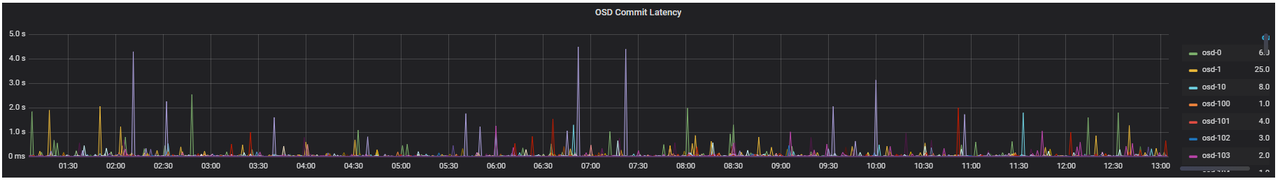

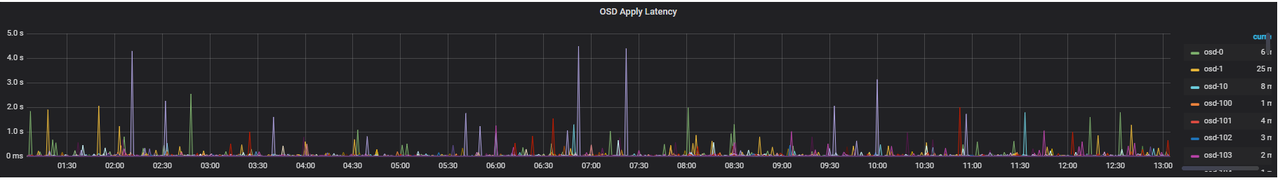

11b. My graph gets cut off so it's hard to pinpoint what OSD is showing up.
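
A note on reading the dump above: each counter's avgtime is simply sum divided by avgcount, e.g. for started_latency 10300276.394202555 / 509 ≈ 20236.30 seconds. As far as I understand it, the recoverystate_perf section records how long placement groups stayed in each peering/recovery state, so hour-long averages for states such as started or clean most likely reflect uptime rather than I/O latency; treat that reading as an assumption. The sketch below (the function name `perf_avgtimes` is made up) recomputes the averages from a pasted fragment like the one above and sorts them, which makes the long-lived states easy to tell apart from the short ones.

```shell
#!/bin/bash
# perf_avgtimes [FILE]
# Recompute avgtime = sum / avgcount for each counter in a pasted
# perf-dump fragment and list the counters by descending average.
# Hypothetical helper; it parses the text line by line, not as JSON.
perf_avgtimes() {
    awk '
        # A line such as  "started_latency": {  opens a counter.
        /"[a-z_]+": *[{]/ { name = $1; gsub(/[":]/, "", name); next }
        # "avgcount": 509,  (the trailing comma is ignored by "+ 0")
        /"avgcount":/     { count = $2 + 0; next }
        # "sum": 10300276.394202555,  (skip counters that never fired)
        /"sum":/          { if (count > 0) printf "%.6f %s\n", ($2 + 0) / count, name }
    ' "${1:--}" | sort -rn
}
```

Usage would be something like `ceph daemon osd.20 perf dump | perf_avgtimes`, or `perf_avgtimes dump.txt` for a saved copy.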

admin

2,961 Posts

November 4, 2022, 9:59 pm

1)

MX500 is not highly rated with Ceph, it is a consumer model SSD.

7) you could use

#! /bin/bash

for DEVICE_PATH in $(find /sys/block/* | grep -E '\/(sd)' )

do

DEVICE=${DEVICE_PATH##*/}

/sbin/hdparm -W 0 /dev/$DEVICE > /dev/null 2>&1

done

for DEVICE_PATH in $(find /sys/block/* | grep -E '\/(nvme)' )

do

DEVICE=${DEVICE_PATH##*/}

nvme set-feature /dev/$DEVICE -f 0x6 -v 0 > /dev/null 2>&1

done

10)

log_latency_fn slow operation observed for _txc_committed_kv, latency = 5.742069367s

This is not good, can you try the following and see if it helps:

ceph config set osd.* bluefs_buffered_io true

Also, do you see any kernel dmesg messages relating to the drives at the time of the latency spikes?

In general, for VMware backups we recommend:

use thick provisioned/eager zeroed vmdk disks

set the MaxIoSize param to 512k as per our guide
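
Before running the cache script from point 7 on a production node, it may be worth previewing which devices it would touch. The sketch below is a dry-run variant, not the script above: it uses a plain shell glob instead of find/grep, only prints the hdparm and nvme commands, and the function name and the optional directory argument are made up for illustration.

```shell
#!/bin/bash
# print_cache_disable_cmds [SYS_BLOCK_DIR]
# Dry run: print, but do not execute, the write-cache command for each
# sd* and nvme* block device found in SYS_BLOCK_DIR (default /sys/block).
# Hypothetical helper for previewing the effect of the script above.
print_cache_disable_cmds() {
    local sys_block="${1:-/sys/block}"
    local path dev
    for path in "$sys_block"/sd* "$sys_block"/nvme*; do
        [ -e "$path" ] || continue    # pattern matched nothing
        dev="${path##*/}"             # strip the directory part
        case "$dev" in
            sd*)   echo "hdparm -W 0 /dev/$dev" ;;
            nvme*) echo "nvme set-feature /dev/$dev -f 0x6 -v 0" ;;
        esac
    done
}
```

After the real script has run, `hdparm -W /dev/sdX` (without a value) reports the current write-caching state of a drive. On many drives this setting is reported not to survive a power cycle, so it is probably worth re-checking after a reboot.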

rvalkenburg

8 Posts

November 7, 2022, 1:59 pm

MX500 is not highly rated with Ceph, it is a consumer model SSD.

I'm getting that impression now yes. Would a Samsung Evo 870 Pro work better?

#! /bin/bash

for DEVICE_PATH in $(find /sys/block/* | grep -E '\/(sd)' )

do

DEVICE=${DEVICE_PATH##*/}

/sbin/hdparm -W 0 /dev/$DEVICE > /dev/null 2>&1

done

for DEVICE_PATH in $(find /sys/block/* | grep -E '\/(nvme)' )

do

DEVICE=${DEVICE_PATH##*/}

nvme set-feature /dev/$DEVICE -f 0x6 -v 0 > /dev/null 2>&1

done

I ran this on all the nodes, I'll report back if disabling the drive cache helped/fixed the issue.

ceph config set osd.* bluefs_buffered_io true

bluefs_buffered_io true is already set in my ceph config by default it seems.

I did follow the guide and configured the iSCSI software interfaces per spec.

Thank you for all the help so far. I really appreciate it.

admin

2,961 Posts

November 7, 2022, 9:24 pm

No, the Evo 870 Pro will not be better; it is probably worse than the MX500. There are many good drives recommended for Ceph, but they are not consumer-grade drives. You can search for recommended drives yourself, or I can recommend some if you wish.

I recommend you try the different points in the previous posts and see if any of them has an effect.

Can you also check your memory % utilization and make sure you have no memory issues?
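
If it helps, memory utilization can be read straight from /proc/meminfo on each node. This is a rough sketch with a made-up function name; it computes (MemTotal - MemAvailable) / MemTotal, which may not match how the PetaSAN dashboard calculates its percentage.

```shell
#!/bin/bash
# mem_used_pct [MEMINFO_FILE]
# Print the percentage of RAM that is not "available" according to the
# MemTotal and MemAvailable lines (default file: /proc/meminfo).
# Hypothetical helper; the dashboard figure may be computed differently.
mem_used_pct() {
    awk '
        $1 == "MemTotal:"     { total = $2 }
        $1 == "MemAvailable:" { avail = $2 }
        END {
            if (total > 0) printf "%.1f\n", (total - avail) * 100 / total
        }
    ' "${1:-/proc/meminfo}"
}
```

Running `mem_used_pct` with no argument on a node prints a single number such as a percentage with one decimal place; page cache that can be reclaimed counts as available here.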

rvalkenburg

8 Posts

November 8, 2022, 1:59 pm

No consumer drives, got it. So basically enterprise-grade or data-center-grade drives only.

Right, so about the previous tasks you asked me to check:

- RAM utilization is 79% on all nodes.

- The script to turn off the write cache on the drives did help quite a bit. The latency has reduced significantly.

- Setting bluefs_buffered_io to true had no effect; I think it was already enabled by default.

admin

2,961 Posts

November 8, 2022, 8:25 pm

Good, things are better. I would also try disabling scrub, as pointed out above, just to rule out any issue relating to scrub.

Last edited on November 8, 2022, 8:25 pm by admin · #8
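
For the scrub test, the thread suggests the switch in the PetaSAN maintenance tab. On a plain Ceph cluster the same effect is normally achieved with the noscrub and nodeep-scrub flags; whether the maintenance tab does exactly this is an assumption here. A minimal sketch, with a made-up wrapper name and a `CEPH` variable added only so the commands can be previewed:

```shell
#!/bin/bash
# set_scrub on|off
# Toggle the cluster-wide noscrub / nodeep-scrub flags.
# Set CEPH=echo to print the ceph arguments instead of running them.
# Hypothetical wrapper; the flags themselves are standard Ceph OSD flags.
set_scrub() {
    local ceph="${CEPH:-ceph}"
    case "$1" in
        off) "$ceph" osd set noscrub   && "$ceph" osd set nodeep-scrub ;;
        on)  "$ceph" osd unset noscrub && "$ceph" osd unset nodeep-scrub ;;
        *)   echo "usage: set_scrub on|off" >&2; return 2 ;;
    esac
}
```

`CEPH=echo set_scrub off` shows what would be run. Remember to turn scrubbing back on with `set_scrub on` once the latency test is finished.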
